NOTE: This article is relevant for Delphi 10.4.2 and earlier.

It is now a requirement to support Billing API v3 on Android, and an update in Delphi 11 has changed the Android IAP source files accordingly.

Fundamentally, the same principles apply to the updated Android IAP source files, but I will update this blog to cover Delphi 11+ as soon as I can.

The In-App Purchase support in Delphi is pretty well written and supports Consumable and Non-Consumable purchases out of the box.

Unfortunately, subscriptions aren’t supported by the built-in libraries, but the good news is that it isn’t difficult to change the Firemonkey source files to make it work.

This tutorial takes you through the changes needed, along with how to check your subscription status and then how to get through Apple’s rigorous App Store Review process to actually get it onto the store.

If you aren’t already familiar with how to set up in-app purchases in general within your Delphi app, read these links:

http://docwiki.embarcadero.com/RADStudio/Tokyo/en/Using_the_iOS_In-App_Purchase_Service

http://docwiki.embarcadero.com/RADStudio/Tokyo/en/Using_the_Google_Play_In-app_Billing_Service

Prerequisite: This tutorial isn’t an introduction to in-app purchases. It assumes that you’ve followed the above docwiki tutorials (which are really easy to use!) and are familiar with the basics.

Note: This tutorial is based on editing the 10.1 Berlin version of the files. I seriously doubt Embarcadero have updated them in Tokyo 10.2, but please check before making these changes just in case. Also note that you’ll need Professional or higher editions to make these changes as Starter doesn’t include the Firemonkey source files.

Some Background Reading…

The Android Subscription IAP reference is here:

https://developer.android.com/google/play/billing/billing_subscriptions.html

The iOS IAP references are here:

https://developer.apple.com/library/content/documentation/NetworkingInternet/Conceptual/StoreKitGuide/Chapters/Subscriptions.html

The iOS Receipt Validation Guide is here:

https://developer.apple.com/library/content/releasenotes/General/ValidateAppStoreReceipt/Introduction.html#//apple_ref/doc/uid/TP40010573

Let’s get started!

There are 2 workflows you need to add to your app in order to support subscriptions correctly:

- Purchase workflow

- Subscription status workflow (including activating or deactivating features accordingly)

The purchase workflow is fairly straightforward, but requires you to make some changes to the in-built IAP classes provided with Delphi to support it.

The subscription status management workflow can be quite tricky as you’re required to check and handle everything yourself, but with some careful thought about this before starting to code, you should be able to offer a solid solution in your app which doesn’t create a jarring user experience.

Note: I’ll explain how to get access to the subscription status information but how you implement this within your own app logic is totally your choice. Some prefer to do the checking and feature expiry/activation on app load, others in different ways, so I won’t be offering any guidance around this in the tutorial as it will be different for every app.

1. Changes for the Purchase workflow

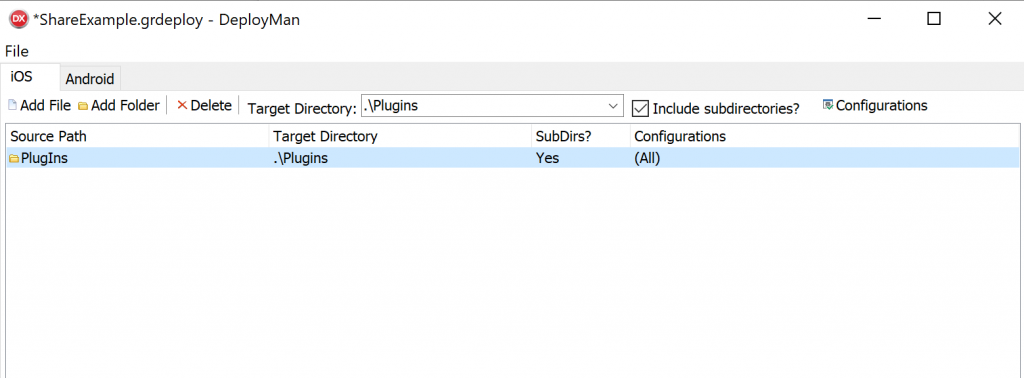

To start, we’re going to need to edit the following files:

- FMX.InAppPurchase.pas

- FMX.InAppPurchase.iOS.pas

- FMX.InAppPurchase.Android.pas

These reside in the “Source\fmx” folder of your Delphi installation (on Berlin 10.1 this may be: C:\Program Files (x86)\Embarcadero\Studio\18.0\source\fmx).

Copy these files into your project folder, but don’t drag them into your project tree within the IDE. Just having them in your project folder will be enough for the compiler to find them and use them in preference to the system versions of the files.

FMX.InAppPurchase.pas:

We’re going to extend the base classes to add a few extra functions which you’ll need in order to produce a decent subscription experience for your users.

Note: in native iOS land, the calls for purchasing a subscription and a consumable/non-consumable item are the same; the product type is determined automatically by the product ID passed in. However, to provide a common cross-platform interface, we added a separate call, as you’ll see below, that works for Android too.

In FMX.InAppPurchase.pas, make the following changes:

Within IFMXInAppPurchaseService, find:

```pascal
procedure PurchaseProduct(const ProductID: string);
```

Add the following below it:

```pascal
procedure PurchaseSubscription(const ProductID: string);
function GetProductToken(const ProductID: String): String;
function GetProductPurchaseDate(const ProductID: String): TDateTime;
```

Within TCustomInAppPurchase, search for the PurchaseProduct procedure and add the same new declarations as above.

Then implement the new methods of TCustomInAppPurchase using the following code:

```pascal
procedure TCustomInAppPurchase.PurchaseSubscription(const ProductID: string);
begin
  CheckInAppPurchaseIsSetup;
  if FInAppPurchaseService <> nil then
    FInAppPurchaseService.PurchaseSubscription(ProductID);
end;

function TCustomInAppPurchase.GetProductPurchaseDate(const ProductID: String): TDateTime;
begin
  if FInAppPurchaseService <> nil then
    Result := FInAppPurchaseService.GetProductPurchaseDate(ProductID)
  else
    Result := EncodeDate(1970, 1, 1);
end;

function TCustomInAppPurchase.GetProductToken(const ProductID: String): String;
begin
  if FInAppPurchaseService <> nil then
    Result := FInAppPurchaseService.GetProductToken(ProductID)
  else
    Result := '';
end;
```

FMX.InAppPurchase.iOS.pas:

Within TiOSInAppPurchaseService, find:

```pascal
procedure PurchaseProduct(const ProductID: string);
```

Add the following below it:

```pascal
procedure PurchaseSubscription(const ProductID: string);
function GetProductToken(const ProductID: String): String;
function GetProductPurchaseDate(const ProductID: String): TDateTime;
```

Implement these new functions using the code below:

```pascal
procedure TiOSInAppPurchaseService.PurchaseSubscription(const ProductID: string);
begin
  Self.PurchaseProduct(ProductID);
end;

function TiOSInAppPurchaseService.GetProductPurchaseDate(const ProductID: String): TDateTime;
begin
  Result := EncodeDate(1970, 1, 1);
end;

function TiOSInAppPurchaseService.GetProductToken(const ProductID: String): String;
begin
  Result := '';
end;
```

As you can see, these are mostly placeholder functions on iOS, because this information isn’t available through the same APIs there, whereas it is on Android.

So how do you get the purchase date and product token for this purchase? That’s where the receipt validation comes in! iOS doesn’t have native API calls to get this information about a subscription (unlike Android) so your app will need to cache the original purchase information at time of purchase, then rely on the receipt mechanism to update this cache at regular intervals in future.

I’ll go into this workflow later in the tutorial.
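As a minimal sketch of that caching step, assuming you handle the TInAppPurchase component’s OnRecordTransaction event (its ProductID, TransactionID and TransactionDate values are the same ones passed to DoRecordTransaction in the Android code below), you could persist the values to a local INI file. TSubscriptionCache and the file name are hypothetical; use whatever storage your app already has. It’s written as a small console program so it can run on its own.

```pascal
program SubscriptionCacheDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.IniFiles, System.IOUtils;

type
  // Hypothetical helper: remembers the latest transaction seen per product
  TSubscriptionCache = class
  private
    FIni: TMemIniFile;
  public
    constructor Create(const FileName: string);
    destructor Destroy; override;
    // Call this from your OnRecordTransaction event handler
    procedure RecordTransaction(const ProductID, TransactionID: string;
      TransactionDate: TDateTime);
    function GetTransactionID(const ProductID: string): string;
    function GetPurchaseDate(const ProductID: string): TDateTime;
  end;

constructor TSubscriptionCache.Create(const FileName: string);
begin
  inherited Create;
  FIni := TMemIniFile.Create(FileName);
end;

destructor TSubscriptionCache.Destroy;
begin
  FIni.Free;
  inherited;
end;

procedure TSubscriptionCache.RecordTransaction(const ProductID,
  TransactionID: string; TransactionDate: TDateTime);
begin
  // One INI section per product; a renewal overwrites the previous values
  FIni.WriteString(ProductID, 'TransactionID', TransactionID);
  FIni.WriteDateTime(ProductID, 'PurchaseDate', TransactionDate);
  FIni.UpdateFile; // write to disk immediately
end;

function TSubscriptionCache.GetTransactionID(const ProductID: string): string;
begin
  Result := FIni.ReadString(ProductID, 'TransactionID', '');
end;

function TSubscriptionCache.GetPurchaseDate(const ProductID: string): TDateTime;
begin
  // Same 1970-01-01 "unknown" default as GetProductPurchaseDate above
  Result := FIni.ReadDateTime(ProductID, 'PurchaseDate', EncodeDate(1970, 1, 1));
end;

var
  Cache: TSubscriptionCache;
begin
  // On a device you'd probably use TPath.GetDocumentsPath instead of temp
  Cache := TSubscriptionCache.Create(
    TPath.Combine(TPath.GetTempPath, 'subscriptions.ini'));
  try
    Cache.RecordTransaction('com.example.monthly', '1000000123456789', Now);
    Writeln(Cache.GetTransactionID('com.example.monthly')); // prints 1000000123456789
  finally
    Cache.Free;
  end;
end.
```

Receipt validation can then overwrite these cached values whenever it finds a newer transaction.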

FMX.InAppPurchase.Android.pas:

Within TAndroidInAppPurchaseService, find:

```pascal
procedure PurchaseProduct(const ProductID: string);
```

Add the following below it:

```pascal
procedure PurchaseSubscription(const ProductID: string);
function GetProductToken(const ProductID: String): String;
function GetProductPurchaseDate(const ProductID: String): TDateTime;
```

Implement these new functions using the code below:

```pascal
procedure TAndroidInAppPurchaseService.PurchaseSubscription(const ProductID: string);
begin
  CheckApplicationLicenseKey;
  //Do NOT block re-purchases of products or users won't be able to
  //re-purchase expired subscription. Also makes it harder to test!
  //if IsProductPurchased(ProductID) then
  //  raise EIAPException.Create(SIAPAlreadyPurchased);

  //The docs say it must be called in the UI Thread, so...
  CallInUiThread(procedure
    begin
      FHelper.LaunchPurchaseFlow(ProductID, TProductKind.Subscription, InAppPurchaseRequestCode,
        DoPurchaseFinished, FTransactionPayload);
    end);
end;

function TAndroidInAppPurchaseService.GetProductPurchaseDate(const ProductID: String): TDateTime;
var
  Purchase: TPurchase;
begin
  Result := EncodeDate(1970, 1, 1);
  if FInventory.IsPurchased(ProductID) then
  begin
    Purchase := FInventory.Purchases[ProductID];
    if Purchase <> nil then
      Result := Purchase.PurchaseTime;
  end;
end;

function TAndroidInAppPurchaseService.GetProductToken(const ProductID: String): String;
var
  Purchase: TPurchase;
begin
  Result := '';
  if FInventory.IsPurchased(ProductID) then
  begin
    Purchase := FInventory.Purchases[ProductID];
    if Purchase <> nil then
      Result := Purchase.Token;
  end;
end;
```

With these changes, you’ll be able to access the most recent transaction ID for a product purchase (the purchase token on Android), which is useful to display in a purchase history UI, along with the last purchase date.

There are a couple of “bug fixes” you need to apply within the Android unit too:

```pascal
procedure TInventory.AddPurchase(const Purchase: TPurchase);
begin
  //For subscription it must replace or it'll keep the old transactionID
  if IsPurchased(Purchase.Sku) then
    ErasePurchase(Purchase.Sku);
  FPurchaseMap.Add(Purchase.Sku, Purchase);
end;
```

The original code caches the transaction ID and dates internally, so whenever a subscription is re-purchased (e.g. during testing or if the user changes their mind about cancelling a subscription) it won’t keep the new transaction ID. The above change fixes this by replacing the purchase details.

And also:

```pascal
procedure TAndroidInAppPurchaseService.DoPurchaseFinished(const IabResult: TIabResult; const Purchase: TPurchase);
begin
  if IabResult.IsSuccess then
  begin
    //If we've a payload on the purchase, ensure it checks out before completing
    if not Purchase.DeveloperPayload.IsEmpty then
    begin
      if not DoVerifyPayload(Purchase) then
      begin
        DoError(TFailureKind.Purchase, SIAPPayloadVerificationFailed);
        Exit;
      end;
    end;
    FInventory.AddPurchase(Purchase);
    //These two are swapped to match the event call order of iOS
    DoRecordTransaction(Purchase.Sku, Purchase.Token, Purchase.PurchaseTime);
    DoPurchaseCompleted(Purchase.Sku, True);
  end
  else
    DoError(TFailureKind.Purchase, IabResult.ToString);
end;
```

This fix is important because, for some reason, the Android code fires the RecordTransaction and PurchaseCompleted events in a different order from iOS, which makes it hard to write subscription-handling logic that works the same on both platforms.

It’s worth mentioning how the TransactionID and PurchaseDate behave, so you can decide how best to use them:

Non-Consumable Purchases:

Both are the ORIGINAL purchase date and transactionID for the purchase.

Subscriptions:

Both are the MOST RECENT re-purchase date and transactionID for the subscription. When a subscription is automatically renewed, a new transactionID is generated and the purchase date will update to reflect when it was renewed.

For Android, you can then use the purchase date to work out the expiry date for your user experience. On iOS, you need to use the receipt to get this.
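As a rough sketch of that calculation, assuming you know each product’s billing period yourself (the TSubscriptionPeriod enum and EstimateExpiry function below are hypothetical, not part of the FMX units), you could add the period to the date returned by GetProductPurchaseDate. Treat the result as an estimate only: the store decides the real renewal time, and grace periods or billing retries can extend access.

```pascal
program ExpiryEstimateDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.DateUtils;

type
  // Hypothetical: mirrors the billing periods you configured in the Play Console
  TSubscriptionPeriod = (spWeekly, spMonthly, spQuarterly, spYearly);

// Estimated end of the current period, given the latest (re)purchase date
function EstimateExpiry(PurchaseDate: TDateTime;
  Period: TSubscriptionPeriod): TDateTime;
begin
  case Period of
    spWeekly:    Result := IncDay(PurchaseDate, 7);
    spMonthly:   Result := IncMonth(PurchaseDate, 1);
    spQuarterly: Result := IncMonth(PurchaseDate, 3);
    spYearly:    Result := IncYear(PurchaseDate, 1);
  else
    Result := PurchaseDate;
  end;
end;

function IsProbablyActive(PurchaseDate: TDateTime;
  Period: TSubscriptionPeriod; NowTime: TDateTime): Boolean;
begin
  Result := NowTime < EstimateExpiry(PurchaseDate, Period);
end;

var
  Bought: TDateTime;
begin
  Bought := EncodeDateTime(2018, 1, 31, 12, 0, 0, 0);
  // IncMonth clamps to the last day of a shorter month: 28 Feb 2018, 12:00
  Writeln(DateTimeToStr(EstimateExpiry(Bought, spMonthly)));
end.
```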

Note: There is a much better, recommended way to get the latest status of your users’ purchases, which is to listen to Real Time Developer Notifications (RTDN) and App Store Server Notifications on your server and update the purchase records on your own server.

This is more work and outside the scope of this tutorial, but for a more robust and accurate way to handle subscriptions, you really should look into doing this. It also allows you to detect subscription upgrades/downgrades, paused and resumed subscriptions (Android) and more situations that you can’t get from just the app-level APIs.

For details, take a look here:

https://developer.android.com/google/play/billing/getting-ready

https://developer.android.com/google/play/billing/rtdn-reference

https://developer.apple.com/documentation/storekit/original_api_for_in-app_purchase/subscriptions_and_offers/enabling_app_store_server_notifications

At this point, you will be able to purchase subscriptions for Android and iOS through the updated API. This is only the first part of the challenge! Now we need to check for expiry…

2. Checking Subscription Status

Subscription status checking isn’t as easy as the one-call approach to purchasing, as there aren’t any API calls to give you this information to the level of detail you’ll need.

To get details about the user’s subscription, you can do one of two things depending on the level of detail you require:

1. Just call .IsProductPurchased() on the IAP class.

This will return true/false but may not be as reliable as you think. There are known cases where the store returns “yes” when the subscription has actually been cancelled or expired. This also won’t tell you when the subscription is due to expire, so it should only be used as a high-level check.

2. Use Receipt Validation (iOS) or subscribe to Real-Time Developer Notifications (Android).

This will provide you with real up-to-date information about the subscription, when it’s expected to expire, what really happened to it, why and when.

Note: RTDN (Android) MUST be done from your server as it uses a webhook back to your server – effectively poking your server when something has changed, that you can then use to update your back-end records about the subscription.

For Receipt Validation, this CAN be done from within your app, but Apple recommend you do this from your server too.

Checking for Expiry

The Quick Way – iOS or Android

Using IsProductPurchased() may be enough if all you want to do is disable a feature if the store thinks that the user’s subscription is no longer active.

Subscriptions can be reported as inactive in the following situations:

- The subscription has expired and isn’t auto-renewable

- The subscription renewal payment could not be taken (and any payment grace period has expired)

- The subscription was cancelled by the user, so wasn’t auto-renewed

- The subscription was made on another device, or the app has been re-installed, and the user hasn’t yet done a Restore Purchases (iOS only).

Note: this isn’t the recommended way to check for subscription expiry, as there are too many ways it can report the status falsely, which can lead to you removing features from paying users – which users don’t tend to like!

Also, you may have problems retaining users, as the basic system described above has no way to detect when someone has cancelled the renewal of their subscription, or if they’re in a trial period, having billing issues or in other cases where you may want to provide some UI to try to persuade or help the user to stay on your subscription.

The BETTER way – Android

Google offer a push-based notification system, which tells you when something changes about a subscription so you can update your records and act accordingly.

If you have a server on which you keep a record of your user’s purchases, then you can create relevant end points in your server API which can be called by Google’s servers when the status of a subscription changes. This gives you a LOT more information, especially about why it’s no longer active, but will only work if your server keeps a record or has a way to push an IAP status change to your app (e.g. using Push Notifications).

These are called Real Time Developer Notifications and there are instructions in the relevant section here:

https://developer.android.com/google/play/billing/billing_subscriptions.html#realtime-notifications
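For orientation only, here is a sketch of what a Delphi-based server endpoint might do with an incoming notification. It assumes the standard Cloud Pub/Sub push format (a JSON body whose message.data field holds the Base64-encoded notification) and the field names from Google’s RTDN reference (subscriptionNotification, notificationType, purchaseToken, subscriptionId); check them against the current reference. TSubscriptionEvent and TryDecodeRtdn are hypothetical names.

```pascal
program RtdnDecodeDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON, System.NetEncoding;

type
  // Hypothetical container for the fields we care about
  TSubscriptionEvent = record
    NotificationType: Integer; // e.g. 2 = RENEWED, 3 = CANCELED, 13 = EXPIRED
    PurchaseToken: string;
    SubscriptionId: string;
  end;

// Returns False for test or one-time-product notifications, which carry
// no subscriptionNotification object.
function TryDecodeRtdn(const PushBody: string; out Event: TSubscriptionEvent): Boolean;
var
  Root, Inner: TJSONValue;
  Data: string;
begin
  Result := False;
  Event := Default(TSubscriptionEvent);
  Root := TJSONObject.ParseJSONValue(PushBody);
  if Root = nil then
    Exit;
  try
    if not Root.TryGetValue<string>('message.data', Data) then
      Exit;
    // message.data is Base64; Decode(string) returns the bytes as UTF-8 text
    Inner := TJSONObject.ParseJSONValue(TNetEncoding.Base64.Decode(Data));
    if Inner = nil then
      Exit;
    try
      Result :=
        Inner.TryGetValue<Integer>('subscriptionNotification.notificationType', Event.NotificationType) and
        Inner.TryGetValue<string>('subscriptionNotification.purchaseToken', Event.PurchaseToken) and
        Inner.TryGetValue<string>('subscriptionNotification.subscriptionId', Event.SubscriptionId);
    finally
      Inner.Free;
    end;
  finally
    Root.Free;
  end;
end;

var
  Encoder: TBase64Encoding;
  SampleBody: string;
  Ev: TSubscriptionEvent;
begin
  Encoder := TBase64Encoding.Create(0); // 0 chars per line = no line breaks
  try
    SampleBody := '{"message":{"data":"' + Encoder.Encode(
      '{"version":"1.0","packageName":"com.example.app",' +
      '"subscriptionNotification":{"version":"1.0","notificationType":2,' +
      '"purchaseToken":"token-abc","subscriptionId":"monthly_sub"}}') +
      '","messageId":"1"},"subscription":"projects/example/subscriptions/play"}';
  finally
    Encoder.Free;
  end;
  if TryDecodeRtdn(SampleBody, Ev) then
    Writeln(Ev.SubscriptionId, ' notificationType=', Ev.NotificationType);
    // prints: monthly_sub notificationType=2
end.
```

The purchase token is what you’d then pass to the Google Developer APIs to fetch the full, authoritative subscription state.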

Along with RTDN, your server can also link to the Google Developer APIs, which give you the ability to ask Google directly for details about your users’ purchases (by purchase token). This approach gives you a lot of details about the purchase, its status, the reason why it was cancelled or has expired, billing retry, trial periods etc.

It’s worth pointing out that there is a chance your server won’t pick up all the RTDN messages for lots of legitimate reasons, or may not be able to process them all in time before your app asks for the latest status.

This is what makes implementing the full subscription workflow so complicated – and not just for Delphi, for any app. Google’s advice is to also use Google Developer APIs to supplement and double-check the server records you’re keeping.

It’s quite a complex implementation requiring you to create and link to a Google Cloud API, set up Pub/Sub and link your Play account but if you’re up for the challenge, it’s a great solution. For details, take a look here:

https://developers.google.com/android-publisher

The BETTER way – iOS

For iOS, Apple offer a receipt validation REST API which you can call to get full details about the purchases made by the user.

Details of how this works are here:

https://developer.apple.com/library/content/releasenotes/General/ValidateAppStoreReceipt/Chapters/ValidateRemotely.html#//apple_ref/doc/uid/TP40010573-CH104

This requires you to send a Base64-encoded copy of the user’s purchase receipt to Apple’s server, along with your shared secret (generated in the iTunes Connect page where you created your subscription IAP). In return you’ll receive a large JSON object, which is the decoded version of the receipt with everything you need to know about all purchases the user has made within your app.

The above link gives you an idea of the structure you can expect and what the receipt fields include (and format) so you can process these.
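As a sketch of that processing step, the function below takes the decoded JSON (however you obtained it) and finds the latest expiry for one product. It assumes the layout described in Apple’s verifyReceipt documentation: auto-renewable transactions appear in latest_receipt_info and in receipt.in_app, and expires_date_ms holds milliseconds since 1970 (UTC) as a string. LatestExpiryUtc and ScanTransactions are hypothetical names.

```pascal
program ReceiptExpiryDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.DateUtils, System.JSON;

// Scans one array of transactions, keeping the largest expiry for ProductID
procedure ScanTransactions(Arr: TJSONArray; const ProductID: string;
  var MaxMs: Int64);
var
  I: Integer;
  Id, ExpiresMs: string;
begin
  if Arr = nil then
    Exit;
  for I := 0 to Arr.Count - 1 do
    if Arr.Items[I].TryGetValue<string>('product_id', Id) and (Id = ProductID) and
       Arr.Items[I].TryGetValue<string>('expires_date_ms', ExpiresMs) then
      if StrToInt64Def(ExpiresMs, 0) > MaxMs then
        MaxMs := StrToInt64Def(ExpiresMs, 0);
end;

// False if the JSON can't be parsed, status <> 0 or no expiry is found
function LatestExpiryUtc(const ResponseJson, ProductID: string;
  out ExpiryUtc: TDateTime): Boolean;
var
  Root: TJSONValue;
  Arr: TJSONArray;
  Status: Integer;
  MaxMs: Int64;
begin
  Result := False;
  ExpiryUtc := 0;
  Root := TJSONObject.ParseJSONValue(ResponseJson);
  if Root = nil then
    Exit;
  try
    if not Root.TryGetValue<Integer>('status', Status) or (Status <> 0) then
      Exit;
    MaxMs := 0;
    if Root.TryGetValue<TJSONArray>('latest_receipt_info', Arr) then
      ScanTransactions(Arr, ProductID, MaxMs);
    if Root.TryGetValue<TJSONArray>('receipt.in_app', Arr) then
      ScanTransactions(Arr, ProductID, MaxMs);
    if MaxMs > 0 then
    begin
      // UnixToDateTime takes whole seconds and returns UTC by default
      ExpiryUtc := UnixToDateTime(MaxMs div 1000);
      Result := True;
    end;
  finally
    Root.Free;
  end;
end;

const
  Sample =
    '{"status":0,"receipt":{"in_app":[]},"latest_receipt_info":[' +
    '{"product_id":"monthly_sub","expires_date_ms":"1514764800000"},' +
    '{"product_id":"monthly_sub","expires_date_ms":"1517443200000"}]}';

var
  Expiry: TDateTime;
begin
  if LatestExpiryUtc(Sample, 'monthly_sub', Expiry) then
    Writeln('Expires (UTC): ', DateTimeToStr(Expiry)); // 1 Feb 2018, 00:00
end.
```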

But what is the user purchase receipt and where can I find it?

When a user makes a purchase, a record of it is added to the app’s receipt file, a binary file signed by Apple. The receipt file is stored in the sandbox for the app, so if the app is deleted, so is the receipt file.

That’s great, but if the user re-installs the app, the receipt won’t be there!

This is where the Restore Purchases facility comes in, and why you MUST implement it in your app for iOS. When the user restores their purchases, the app is given a new receipt file, which it then stores in the app sandbox.

It’s this receipt file that is used when you query IsProductPurchased(), which is why, if you don’t call Restore Purchases, your app will claim that your user doesn’t have the purchases they’ve paid for.

To find your receipt file within your app, do the following:

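```pascal
function GetReceiptPath: string;
var
  mainBundle: NSBundle;
  receiptUrl: NSURL;
begin
  Result := '';
  mainBundle := TiOSHelper.MainBundle;
  receiptUrl := mainBundle.appStoreReceiptURL;
  if receiptUrl <> nil then
    Result := NSStrToStr(receiptUrl.path);
end;
```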

You will need to include the following in your uses:

```pascal
iOSapi.Foundation,
iOSapi.Helpers,
Macapi.Helpers;
```

Note: You can’t just read this file and get what you need from it: it’s a signed binary container (PKCS #7) that you would have to parse and verify yourself. Instead, you can send it to Apple’s validation server, which will decode it and send you back a ready-to-use JSON version of the receipt.

A Delphi-based example of how you may choose to read the file and send to Apple is below:

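```pascal
// Needs System.SysUtils, System.Classes, System.IOUtils, System.NetEncoding
// and REST.Types in your uses clause. RESTClient1/RESTRequest1 are
// TRESTClient/TRESTRequest components on your form.
if TFile.Exists(urlAsString) then
begin
  streamIn := TFileStream.Create(urlAsString, fmOpenRead or fmShareDenyWrite);
  streamOut := TStringStream.Create;
  try
    TNetEncoding.Base64.Encode(streamIn, streamOut);
    // The default Base64 encoder inserts line breaks, so strip them
    receiptBase64 := streamOut.DataString.Replace(#10, '', [rfReplaceAll])
      .Replace(#13, '', [rfReplaceAll]);

    // Sandbox builds store the receipt in a file named "sandboxReceipt"
    if urlAsString.Contains('sandbox') then
      baseUrl := 'https://sandbox.itunes.apple.com/'
    else
      baseUrl := 'https://buy.itunes.apple.com/';

    RESTRequest1.Method := TRESTRequestMethod.rmPOST;
    RESTClient1.BaseURL := baseUrl;
    RESTRequest1.Resource := 'verifyReceipt';
    RESTRequest1.AddBody('{"receipt-data": "' + receiptBase64 + '", ' +
      '"password": "' + cSecretKey + '"}', TRESTContentType.ctAPPLICATION_JSON);
    RESTRequest1.Execute;
    // The JSON reply is now in your TRESTResponse component's Content
  finally
    streamIn.Free;
    streamOut.Free;
  end;
end;
```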

NOTE: Apple suggest you don’t do this call from within your app, and instead should do it from your server – though it’s the same process.

cSecretKey is the shared secret that you need to generate within the iTunesConnect page of your subscription IAP product. It’s only required if you have auto-renewing subscriptions within your app.
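If you’d rather not build the JSON by hand and strip line breaks afterwards, one variation is to encode with a TBase64Encoding created with zero characters per line (so no line breaks are inserted) and let System.JSON produce the body, which also takes care of escaping. This is a sketch: BuildVerifyReceiptBody is a hypothetical helper, and exclude-old-transactions is an optional verifyReceipt field which, for auto-renewable subscriptions, asks Apple to return only the latest renewal transaction.

```pascal
program VerifyBodyDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON, System.NetEncoding;

// Builds the JSON body for Apple's verifyReceipt endpoint
function BuildVerifyReceiptBody(const ReceiptBytes: TBytes;
  const SharedSecret: string): string;
var
  Encoder: TBase64Encoding;
  Body: TJSONObject;
begin
  Encoder := TBase64Encoding.Create(0); // 0 chars per line = no CR/LF breaks
  Body := TJSONObject.Create;
  try
    Body.AddPair('receipt-data', Encoder.EncodeBytesToString(ReceiptBytes));
    Body.AddPair('password', SharedSecret);
    // Optional: only return the latest renewal for each subscription
    Body.AddPair('exclude-old-transactions', TJSONBool.Create(True));
    Result := Body.ToJSON;
  finally
    Body.Free;
    Encoder.Free;
  end;
end;

var
  Json: string;
begin
  // In the app, the bytes would come from TFile.ReadAllBytes(urlAsString)
  Json := BuildVerifyReceiptBody(TEncoding.UTF8.GetBytes('hello'), 'my-shared-secret');
  Writeln(Json);
end.
```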

In our app’s implementation, we Base64-encode the receipt file as shown above, but instead of sending it directly to Apple, we POST it to our server, which then sends it on to Apple and can decode and process the purchase receipt as required on our app’s behalf.

The Resulting Receipt Data

The response you get back from Apple should be the JSON data of the receipt, which is in the “receipt” field of the JSON response.

However, if an error has occurred, the “status” field will tell you what went wrong, so you’re best to test this before assuming the receipt field is valid. A status of “0” means that all went well and your receipt field has been populated. Anything else is an error which you can look up the meaning for in here:

https://developer.apple.com/library/content/releasenotes/General/ValidateAppStoreReceipt/Chapters/ValidateRemotely.html#//apple_ref/doc/uid/TP40010573-CH104
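A small lookup like the one below can make those status codes readable in your logs. The descriptions are our paraphrase of Apple’s status code table, so treat the linked page as authoritative; VerifyStatusText is a hypothetical name.

```pascal
program VerifyStatusDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

// Maps a verifyReceipt "status" value to a short, log-friendly description
function VerifyStatusText(Status: Integer): string;
begin
  case Status of
    0:     Result := 'OK: receipt is valid';
    21000: Result := 'Request was not an HTTP POST or the JSON could not be read';
    21002: Result := 'receipt-data was malformed or missing';
    21003: Result := 'Receipt could not be authenticated';
    21004: Result := 'Shared secret does not match the one on file';
    21005: Result := 'Receipt server is temporarily unavailable';
    21006: Result := 'Receipt is valid but the subscription has expired';
    21007: Result := 'Sandbox receipt sent to the production URL';
    21008: Result := 'Production receipt sent to the sandbox URL';
    21009: Result := 'Internal data access error; try again later';
    21010: Result := 'User account cannot be found or has been deleted';
    21100..21199: Result := 'Internal data access error';
  else
    Result := Format('Unknown status %d', [Status]);
  end;
end;

begin
  Writeln(VerifyStatusText(21007)); // prints: Sandbox receipt sent to the production URL
end.
```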

Tip: The most common error is 21002, which means that your receipt data couldn’t be decoded and may not have been read correctly from the file. Occasionally Apple will return this even if your data is correct, possibly if you’ve run a receipt check twice in quick succession from your app.

The other one we hit early on was 21007, which means you’ve accidentally sent a sandbox receipt to the live URL. Its counterpart, 21008, means you’ve sent a live receipt to the sandbox URL.
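Apple’s receipt validation documentation recommends not guessing the environment at all: always validate against the production URL first and, if you get status 21007, retry against the sandbox URL. That way the same code keeps working while your app is tested, reviewed by App Review (which uses sandbox accounts) and live. Here’s a sketch of that decision logic, assuming you pass in your own HTTP POST routine; TPostJson is a hypothetical callback type, which in a real app might wrap TRESTClient or THTTPClient.

```pascal
program VerifyFallbackDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON;

const
  ProductionUrl = 'https://buy.itunes.apple.com/verifyReceipt';
  SandboxUrl    = 'https://sandbox.itunes.apple.com/verifyReceipt';

type
  // Hypothetical: posts Body to Url and returns the response body
  TPostJson = reference to function(const Url, Body: string): string;

// Reads the "status" field; -1 means the response couldn't be read
function StatusOf(const ResponseJson: string): Integer;
var
  Root: TJSONValue;
begin
  Result := -1;
  Root := TJSONObject.ParseJSONValue(ResponseJson);
  if Root <> nil then
  try
    if not Root.TryGetValue<Integer>('status', Result) then
      Result := -1;
  finally
    Root.Free;
  end;
end;

// Production first; retry on the sandbox only when Apple returns 21007
function VerifyReceipt(const Body: string; const PostJson: TPostJson): string;
begin
  Result := PostJson(ProductionUrl, Body);
  if StatusOf(Result) = 21007 then
    Result := PostJson(SandboxUrl, Body);
end;

var
  Calls: string;
begin
  Calls := '';
  // Fake poster: production rejects the sandbox receipt, sandbox accepts it
  Writeln(VerifyReceipt('{}',
    function(const Url, Body: string): string
    begin
      Calls := Calls + Url + ' ';
      if Url = ProductionUrl then
        Result := '{"status":21007}'
      else
        Result := '{"status":0}';
    end)); // prints {"status":0}
end.
```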

A Note about Environments

The App Store has 2 areas which affect in-app purchases – Sandbox and Live.

When in Sandbox mode (i.e. when you’re logged into the App Store using a Sandbox User account created through the iTunesConnect portal), you won’t be charged for any purchases made, and subscriptions will have an artificially shortened lifespan so you can test expiry conditions much more easily.

The full receipt validation sandbox URL is:

https://sandbox.itunes.apple.com/verifyReceipt

Note about sandbox subscriptions: Once you’ve purchased a subscription using a specific sandbox account, it will auto-renew 5-6 times on an accelerated schedule (for example, a one-month subscription renews every 5 minutes and a one-year subscription every hour), then expire. Once expired, you can’t make another purchase of the same subscription under that account, so if you want to test again, you’ll need to create a new sandbox account in iTC.

It’s a bit of a pain, but just what you have to do!

When in Live mode (i.e. you’re logged in with a live AppleID – NOT recommended during testing), all purchases will be charged for, so best to do this once you’re happy your code works as a final test.

The full receipt validation live URL is:

https://buy.itunes.apple.com/verifyReceipt

A Note about Receipts…

As mentioned earlier, receipts are pulled onto local storage when you restore purchases, but when handling subscriptions you are likely to want to refresh the receipt quite regularly to make sure you have the latest information (such as expiry dates).

There is nothing in the InAppPurchase libraries which does this (the receipt refresh when calling RestorePurchases is built into the Apple SDKs); however, it’s not difficult to do:

```pascal
// Requires Macapi.ObjectiveC, iOSapi.Foundation and iOSapi.StoreKit in your uses.
type
  //Import the necessary missing calls from StoreKit SDK
  SKReceiptRefreshRequestClass = interface(SKRequestClass)
    ['{903AA321-6DC7-49D8-88C0-B393C563958B}']
  end;

  SKReceiptRefreshRequest = interface(SKRequest)
    ['{B26069D9-26BA-4BF4-9ECB-B9AD4B2A4AEE}']
    function initWithReceiptProperties(properties: NSDictionary): Pointer; cdecl;
    function receiptProperties: NSDictionary; cdecl;
  end;

  TSKReceiptRefreshRequest = class(TOCGenericImport<SKReceiptRefreshRequestClass,
    SKReceiptRefreshRequest>)
  end;

procedure TPurchaseReceiptManager.RefreshReceipt(OnComplete: TProc);
var
  request: SKReceiptRefreshRequest;
begin
  // OnComplete isn't called in this simple version (see the delegate note below)
  request := TSKReceiptRefreshRequest.Wrap(
    TSKReceiptRefreshRequest.Alloc.initWithReceiptProperties(nil));
  if request <> nil then
    request.start;
end;
```

A note on the above code – it will make a call to ask Apple for the latest receipt for that user from their store purchases, but it may take a moment for the actual receipt file to refresh and be available.

An easy (if basic) solution would be to call the above in a thread, sleep for a couple of seconds then call the code to get the receipt.
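If you do go with the simple thread-and-wait approach, one small refinement is to poll for the receipt file’s last-write time to change (with a timeout) rather than sleeping for a fixed period. The sketch below is plain RTL code meant to run on a background thread after calling RefreshReceipt, with Since set to Now just before the refresh. WaitForReceiptUpdate is a hypothetical helper, and it assumes the refreshed receipt is rewritten at the same path; the delegate approach described next remains the more reliable option.

```pascal
program ReceiptPollDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Classes, System.IOUtils, System.Diagnostics;

// Waits until Path exists and was written after Since, or TimeoutMs elapses.
// Blocks while polling, so call it from a background thread.
function WaitForReceiptUpdate(const Path: string; Since: TDateTime;
  TimeoutMs: Integer; PollMs: Integer = 250): Boolean;
var
  Watch: TStopwatch;
begin
  Watch := TStopwatch.StartNew;
  repeat
    if TFile.Exists(Path) and (TFile.GetLastWriteTime(Path) > Since) then
      Exit(True);
    TThread.Sleep(PollMs);
  until Watch.ElapsedMilliseconds >= TimeoutMs;
  Result := False;
end;

var
  DemoPath: string;
begin
  DemoPath := TPath.Combine(TPath.GetTempPath, 'receipt-demo.bin');
  TFile.WriteAllText(DemoPath, 'dummy');
  // Since = 0 (30 Dec 1899), so any existing file counts as "updated"
  Writeln(WaitForReceiptUpdate(DemoPath, 0, 1000));
end.
```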

A much better solution would be to create an SKRequestDelegate-implementing class which you can pass to SKReceiptRefreshRequest.delegate. This will then tell you when your refresh has completed.

For a similar example of how you can do this in Delphi, take a look at TiOSProductsRequestDelegate in the Embarcadero sources (FMX.InAppPurchase.iOS.pas).

3. Getting App-Store Ready

So now you should be able to offer your users the ability to purchase a subscription, and get details about its expiry, cancellation etc. in order to manage access to your feature.

You may now think that you’re able to implement the purchase UX in your app however you choose… but I’m afraid it’s not that simple.

Google seem fairly lenient on the requirements, as long as you make it clear what the user is buying and for how much. Apple on the other hand, have strict requirements for how you present your purchase facility and what details you show to the user about the subscription prior to them purchasing it.

And be under no illusions – Apple will reject your app if you don’t have EXACTLY the right things in your app they’re looking for.

However, they aren’t being deliberately awkward, they simply want to make sure the subscriber isn’t under any illusion as to what they’re buying, how and when they’ll be charged and in what capacity.

When we first submitted our app for review, it was rejected on a few points that at first baffled us. The rejection from Apple was:

We noticed that your App did not fully meet the terms and conditions for auto-renewing subscriptions, as specified in Schedule 2, Section 3.8(b).

Please revise your app to include the missing information. Adding the above information to the StoreKit modal alert is not sufficient; the information must also be listed somewhere within the app itself, and it must be displayed clearly and conspicuously.

Eventually we worked it out thanks to a combination of the posts below, but to make life easier, I’ve included the specific requirements below:

https://forums.developer.apple.com/thread/74227

https://support.magplus.com/hc/en-us/articles/203808548-iOS-Tips-Tricks-Avoiding-Apple-Rejection

The key things to focus on are:

- You need very specific wording in both your purchase page for the subscription and the App details text on the store listing. The latter confused us, as Apple kept saying it must be in the IAP description, but that field only allows 30 characters! We eventually realised they were referring to the App Store listing itself.

- The purchase page for your subscription MUST include a link to your Privacy Policy and Terms.

- It’s recommended that you offer a direct way to access the subscription management portal from within your app. The URLs for these are:

For iOS:

https://buy.itunes.apple.com/WebObjects/MZFinance.woa/wa/manageSubscriptions

For Android:

https://play.google.com/store/account/
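If you want to link straight to these pages, a small helper keeps the URLs in one place. On Android, Google also documents a deep link to one specific subscription using sku and package query parameters on the subscriptions page. The TStoreKind enum, the helper name and the sample IDs below are hypothetical; actually opening the URL is left to your app’s usual URL-launching code.

```pascal
program ManageUrlDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

const
  IOSManageUrl =
    'https://buy.itunes.apple.com/WebObjects/MZFinance.woa/wa/manageSubscriptions';
  AndroidAccountUrl = 'https://play.google.com/store/account/';

type
  TStoreKind = (skAppStore, skGooglePlay); // hypothetical

// Returns the subscription management page; on Google Play, passing the
// product ID (SKU) and package name deep-links to that one subscription.
function ManageSubscriptionsUrl(Store: TStoreKind; const Sku: string = '';
  const PackageName: string = ''): string;
begin
  if Store = skAppStore then
    Result := IOSManageUrl
  else if (Sku <> '') and (PackageName <> '') then
    // Play product IDs and package names only use letters, digits, '.'
    // and '_', so no URL escaping is applied here
    Result := AndroidAccountUrl + 'subscriptions?sku=' + Sku +
      '&package=' + PackageName
  else
    Result := AndroidAccountUrl;
end;

begin
  Writeln(ManageSubscriptionsUrl(skGooglePlay, 'monthly_sub', 'com.example.app'));
  // prints https://play.google.com/store/account/subscriptions?sku=monthly_sub&package=com.example.app
end.
```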

The wording you need to include against your subscription in the in-app purchase page is as follows. Note that this is just a guide and you may be asked by Apple to add extra details based on your specific subscription or content type.

1. This subscription offers ……………………………….

Make this as descriptive as possible. e.g. if you offer different subscription levels, then mention it.

2. The subscription includes a free trial period of …. which begins as soon as you purchase the subscription. You can cancel your subscription at any time during this period without being charged, as long as you do so at least 24 hours before the end of the trial period.

3. Payment will be charged to iTunes account at confirmation of purchase

4. Your subscription will automatically renew unless auto-renew is turned off at least 24 hours prior to the end of the current period

5. Your account will be charged for renewal within 24 hours prior to the end of the current period (state the cost of the renewal here)

6. Subscriptions may be managed by the user and auto-renewal may be turned off by going to the user’s Account Settings after purchase.

7. No cancellation of the current subscription is allowed during the active subscription period

8. Privacy Policy link goes here

9. Terms of Use link goes here.

And there we have it! The above should be all you need to implement support for auto-renewable subscriptions in your Firemonkey Android and iOS app.

We hope you found this useful. Happy coding!

Final Notes and Food for Thought…

Subscription implementation is quite complex, but the good news is that it’s the same problem regardless of which tools you use for your app and back-end. It’s the logic that’s complex because of the sheer range of different use-cases you might need to deal with.

My suggestion on this is to Google how others have implemented their subscription management logic and go with whichever is comprehensive enough for your situation. You don’t need to do a perfect job of supporting every case, but the more you can handle, the more likely you are to keep users subscribed and paying you.

Here are a few areas you need to look into, and try to support if possible:

- Users upgrading / downgrading between different types of subscription in a subscription group (e.g. switching from Annual to Monthly)

- Family Sharing (iOS) – this is where one person in a family group buys a subscription or in-app purchase and allows others in their defined family group to get access to them. Good news is that the receipt validation should pick this up properly, but it’s worth reading up on it and testing it yourself.

- Billing issues, Re-try periods and grace periods – these are where a payment couldn’t be taken but the user has been given some time to get it fixed before you take their subscribed features from them.

You can detect this from the receipt / RTDN / Google Developer API calls so you can provide an appropriate UI.

- Server-Side Purchase History – you can try to do everything from within your app, and in some ways the logic is easier, but it is less secure, so both Apple and Google ask you not to do this. Validating receipts and talking to the Apple/Google servers from within your app won’t protect you from common man-in-the-middle attacks, so it’s best to implement your own server, push purchase info to it and use it as your single source of truth. This is basically what both Apple and Google recommend. It also allows you to use RTDN to keep those records up to date.

- Cancellation and Expiry – make sure you test these cases properly! Not just so you can remove features when needed, but also to avoid removing then re-activating features unnecessarily between expiry/renew periods if there’s a small gap due to server records not being updated on time!
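One simple way to avoid that on/off flip-flopping is to keep features switched on for a short tolerance window after the last known expiry, giving renewals time to reach your records. This is only a sketch: HasAccess and the 24-hour default are arbitrary assumptions, not store-defined grace periods, so pick a window that suits your billing periods.

```pascal
program GraceWindowDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.DateUtils;

const
  DefaultToleranceHours = 24; // arbitrary; not a store-defined grace period

// True while NowUtc is before the expiry plus a tolerance window. Use UTC for
// both values (e.g. TTimeZone.Local.ToUniversalTime(Now)) so device time-zone
// changes don't matter.
function HasAccess(ExpiryUtc, NowUtc: TDateTime;
  ToleranceHours: Integer = DefaultToleranceHours): Boolean;
begin
  Result := NowUtc < IncHour(ExpiryUtc, ToleranceHours);
end;

var
  Expiry: TDateTime;
begin
  Expiry := EncodeDateTime(2018, 2, 1, 0, 0, 0, 0);
  // 6 hours after expiry: still inside the 24-hour window
  Writeln(HasAccess(Expiry, IncHour(Expiry, 6)));
  // 2 days after expiry: access removed
  Writeln(HasAccess(Expiry, IncDay(Expiry, 2)));
end.
```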

In all, it’s a minefield, but if you get it working it’s a very worthwhile way to make some real money from your app.